Publication 2 April 2025

10 AI Governance Priorities: Survey of 40 Civil Society Organisations

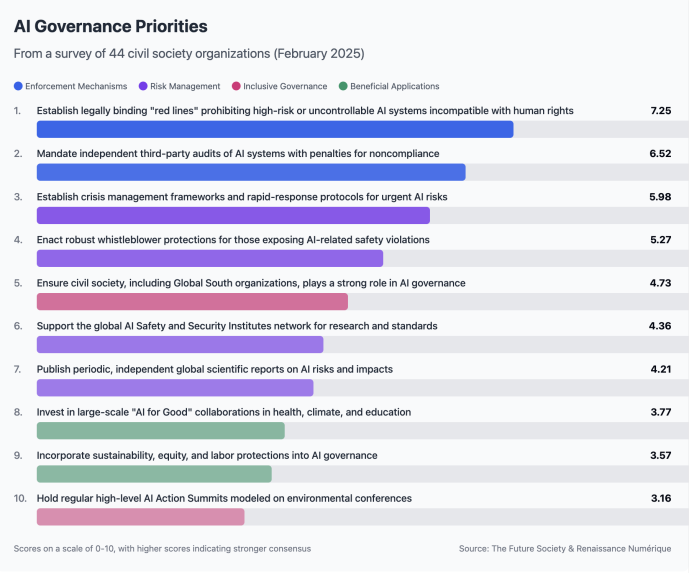

To identify the most urgent governance interventions for 2025, Renaissance Numérique, in partnership with The Future Society, conducted a prioritisation exercise around the Paris AI Action Summit. Building on the Summit’s global consultation of 11,000+ citizens and 200+ expert organisations, we co-organised a structured workshop on the margins of the Summit, where 61 participants mapped critical governance gaps. We then surveyed 44 civil society organisations – including research institutes, think tanks, and advocacy groups – to rank ten potential governance mechanisms. Though limited in scale, this sample offers an indication of emerging civil society consensus.

The survey responses showed the strongest consensus around: (1) establishing legally binding “red lines” for high-risk or uncontrollable AI systems, (2) mandating independent third-party audits with verification of safety commitments, (3) implementing crisis management frameworks, (4) enacting robust whistleblower protections, and (5) ensuring meaningful civil society participation in governance.

Our analysis organises these priorities into a four-dimensional roadmap: enforcement mechanisms, risk management systems, inclusive governance structures, and beneficial applications. This roadmap provides actionable guidance for key governance initiatives, including the upcoming India AI Summit, the OECD AI Policy Observatory, the implementation of UNESCO’s Recommendation on the Ethics of AI, the UN Global Digital Compact, and regional frameworks such as the EU’s GPAI Code of Practice. It also offers civil society organisations a shared snapshot of priorities for advocacy and strategic coordination. By working together to implement these priority mechanisms, policymakers and civil society can lay a foundation for making AI safer, more accountable, and more aligned with the public interest.

Background and Methodology

The survey and the analysis of its results build heavily on insights gathered through a structured consultation process on AI governance priorities:

Make.org, Sciences Po, the AI and Society Institute, and the French National Digital Council (CNNum) conducted a global consultation for the AI Action Summit, gathering input from over 11,000 citizens and 200+ expert organisations across continents. This consultation established key areas of concern, including AI risk thresholds, corporate accountability for commitments, and civil society inclusion.

Phase 1: Paris AI Action Summit Side Event (February 11, 2025)

Building on the global consultation findings, an official side event titled “Global AI Governance: Empowering Civil Society” was held during the Paris AI Action Summit. The event was co-organised by Renaissance Numérique, The Future Society, Wikimedia France, the Avaaz Foundation, Connected by Data, Digihumanism, and the European Center for Not-for-Profit Law. The results of the global consultation were presented at the event. The 61 participants then identified governance gaps and submitted concrete proposals to fill them via an interactive polling platform, which enabled real-time consolidation of diverse inputs. These submissions were subsequently synthesised into 15 potential governance priorities.

Phase 2: Priority Ranking Survey (February 15-25, 2025)

Renaissance Numérique and The Future Society consolidated the 15 initial priorities into 10 for easier voting. The ranking survey of these priorities was distributed through multiple civil society networks and professional channels to encourage broad participation, with 44 organisations ultimately responding. While participation was anonymous, all respondents self-identified as representatives of civil society organisations, think tanks, or academic institutions with expertise in AI governance.

Scoring Methodology

The prioritisation used a weighted ranking system in which each organisation’s top-ranked item received 10 points, its second-ranked item 9 points, and so on down to 1 point for the tenth. Final scores are the average points each item received across all responding organisations; they range from 3.16 to 7.25, with higher scores indicating stronger consensus.
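As a rough illustration of this scoring rule, the Python sketch below computes average weighted-rank scores from a set of ranked ballots. It is not the Sli.do implementation. It assumes that an item a respondent leaves unranked earns 0 points while that respondent still counts in the average, which is how the survey’s 0 to 10 scale is described. The ballots and the short item labels (`red_lines`, `audits`, and so on) are hypothetical and are not the survey data.

```python
MAX_POINTS = 10  # points for a respondent's top-ranked item (ten items in the survey)


def consensus_scores(ballots: list[list[str]], items: list[str]) -> dict[str, float]:
    """Return the average weighted-rank score of each item across all ballots.

    The item at position 0 of a ballot earns MAX_POINTS, position 1 earns
    MAX_POINTS - 1, and so on. Items missing from a ballot earn 0 points
    (an assumption), but every ballot still counts in the denominator.
    """
    totals = {item: 0 for item in items}
    for ballot in ballots:
        # Reject ballots that are too long, repeat an item, or name unknown items.
        if (len(ballot) > MAX_POINTS
                or len(set(ballot)) != len(ballot)
                or not set(ballot).issubset(totals)):
            raise ValueError(f"invalid ballot: {ballot}")
        for position, item in enumerate(ballot):
            totals[item] += MAX_POINTS - position
    return {item: total / len(ballots) for item, total in totals.items()}


# Hypothetical ballots using short labels for four of the ten items.
items = ["red_lines", "audits", "crisis", "whistleblowers"]
ballots = [
    ["red_lines", "audits", "crisis"],
    ["audits", "red_lines"],
    ["red_lines", "crisis", "whistleblowers", "audits"],
]

scores = consensus_scores(ballots, items)
for item, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{item}: {score:.2f}")
# red_lines: 9.67
# audits: 8.67
# crisis: 5.67
# whistleblowers: 2.67
```

Because unranked items contribute 0 points while still counting in the denominator, an item’s average drops whenever respondents leave it off their ballots. This is one reason why even the top item can score well below the theoretical maximum of 10.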

While the sample size is modest and cannot claim to represent all civil society perspectives, the structured methodology and diverse participation provide a valuable snapshot of where consensus is emerging. The priorities identified should be understood as a starting point for further dialogue and research rather than a definitive ranking.

Poll Results: Civil Society Organisations’ Governance Priorities

The survey results revealed varying levels of consensus among civil society organisations regarding which governance mechanisms should be prioritised for immediate action. While all ten options were identified as valuable through the consultation process, their relative importance was ranked as follows:

Figure 1: Civil Society Organisations’ AI Governance Priorities by Consensus Score (Scale: 0-10)

In our weighted ranking system, a score of 10 would indicate unanimous agreement that an item should be the top priority, while a score of 0 would indicate that no organisation ranked that item at all. The observed range of scores (3.16 to 7.25) suggests that every item received meaningful support, while still showing a clear stratification of priorities. The gap between consecutive scores indicates how strongly respondents preferred one item over the next. You can find the original Sli.do results here.

- Establish legally binding “red lines” prohibiting certain high-risk or uncontrollable AI systems incompatible with human rights obligations. (7.25)

- Mandate systematic, independent third-party audits of general-purpose AI systems – covering bias, transparency, and accountability – and require rigorous verification of AI safety commitments with follow-up and penalties for noncompliance. (6.52)

- Establish crisis management frameworks and rapid-response security protocols to identify, mitigate, and contain urgent AI risks. (5.98)

- Enact robust whistleblower protections, shielding those who expose AI-related malpractices or safety violations from retaliation. (5.27)

- Ensure civil society, including Global South organisations, plays a strong role in AI governance via agenda-setting, expert groups, and oversight. (4.73)

- Support the global AI Safety and Security Institutes network, fostering shared research, auditing standards, and best practices across regions. (4.36)

- Publish periodic, independent global scientific reports on AI risks, real-world impacts, and emerging governance gaps. (4.21)

- Invest in large-scale “AI for Good” collaborations in sectors like health, climate, and education under transparent frameworks. (3.77)

- Incorporate sustainability, equity, and labor protections into AI governance structures. (3.57)

- Hold regular high-level AI Action Summits modeled on multilateral environmental conferences. (3.16)

This ranking should not be interpreted as suggesting that lower-ranked items are unimportant: all ten governance mechanisms had already been selected as priorities during the earlier consultation phases. Rather, the ranking indicates where civil society organisations see the most urgent need for immediate action, given limited policy resources and attention.

Analysis: A Framework for AI Governance in 2025

The survey results reveal a roadmap for 2025 with four interconnected dimensions. This framework moves beyond high-level principles to concrete implementation mechanisms.

Figure 2. A Roadmap for AI Governance in 2025

1. Enforcement Mechanisms

Participating civil society organisations showed the strongest consensus on legally binding measures, with the two enforcement mechanisms ranked first and second among all priorities:

- Establish legally binding “red lines” prohibiting certain high-risk or uncontrollable AI systems incompatible with human rights obligations. (Highest ranked overall)

- Mandate systematic, independent third-party audits of general-purpose AI systems – covering bias, transparency, and accountability – and require rigorous verification of AI safety commitments with follow-up and penalties for noncompliance. (Second highest consensus)

Prof. Stuart Russell

President (pro tem), International Association for Safe and Ethical AI

The IDAIS-Beijing consensus statement previously identified specific examples of such red lines, including prohibitions on autonomous replication, power-seeking behaviour, assisting in the development of weapons of mass destruction, harmful cyberattacks, and deception about capabilities. These concrete examples may have contributed to binding prohibitions emerging as the top priority in the civil society survey.

David Harris

Chancellor’s Public Scholar, University of California, Berkeley

2. Risk Management

Building on these enforcement foundations, organisations showed substantial support for complementary mechanisms to identify and address risks:

- Establishing crisis management frameworks for rapid response to urgent AI risks

- Protecting whistleblowers who expose AI-related violations

- Supporting the global network of AI Safety/Security Institutes

- Publishing periodic independent scientific reports on AI risks and impacts

These mechanisms work together: research identifies emerging risks, formal audits verify compliance, whistleblower protections help surface issues that formal processes miss, and crisis protocols allow a swift response when needed.

Dr Jess Whittlestone

Head of AI Policy, Centre for Long-Term Resilience (CLTR)

The whistleblower protection priority reflects growing recognition of its crucial role in the AI governance ecosystem. Karl Koch, founder of OAISIS/Third Opinion, a non-profit focused on AI whistleblowing, calls these protections “a uniquely efficient tool to rapidly uncover and prevent risks at their source.” He advocates for measures that “shield individuals who disclose potential critical risks” while noting that regional progress is insufficient: “we need global coverage through, at a minimum, national laws, especially at the US federal level, in China, and in Israel.”

3. Inclusive Governance

For both enforcement and risk management to function effectively across diverse deployment contexts, governance requires varied perspectives:

- Strengthening civil society representation, particularly from the Global South

- Holding regular high-level AI Summits with multi-stakeholder involvement

Jhalak M. Kakkar

Executive Director, Centre for Communication Governance, National Law University in Delhi

The upcoming India AI Summit represents what Kakkar calls “a pivotal moment” to integrate inclusion throughout “the AI value chain and ecosystem,” embedding these principles in both global frameworks and local governance approaches.

4. Beneficial Applications

Complementing risk-focused governance, organisations also supported directing AI toward positive outcomes, although these items ranked lower in the survey:

- Investing in large-scale “AI for Good” collaborations in sectors like health and climate

- Incorporating sustainability, equity, and labor protections into governance frameworks

These priorities address a key strategic challenge identified by B Cavello, Director of Emerging Technologies at Aspen Digital: “People today do not feel like AI works for them. While we work to minimize potential harms from AI use, we must also get concrete about prioritizing progress on the things that matter to the public.” This legitimacy gap could undermine support for governance itself if not addressed.

B Cavello

Director of Emerging Technologies, Aspen Digital

This outcomes-oriented framing suggests beneficial applications should be governed through measurable impact metrics rather than aspirational principles.

Practical Applications

These priorities have direct implications for upcoming governance initiatives:

India AI Summit (November 2025)

With the India AI Summit fast approaching, there is an urgent opportunity to translate these priorities into action. Policymakers preparing for the Summit should consider:

- Establishing technical working groups specifically focused on defining red lines and risk thresholds

- Setting up accountability mechanisms to verify that safety commitments made at previous summits have been fulfilled, with transparent reporting on progress and gaps

- Developing standardised methodologies for independent verification that can work across jurisdictional boundaries

- Creating crisis response protocols with clear intervention thresholds and coordinated response mechanisms

- Implementing transparent civil society participation mechanisms that ensure equitable representation

Global and Regional Implementation Frameworks

Beyond the India AI Summit, civil society AI governance priorities can directly support key regional and global governance processes entering critical implementation phases in 2025. The following recommendations outline how policymakers could make these processes more effective:

- European Union (GPAI Code of Practice): Mandate independent audits covering bias, transparency, and accountability, with clear verification processes and penalties; establish robust whistleblower protections and crisis response protocols.

- OECD (AI Policy Observatory): Expand the AI Incidents Monitor (AIM) for systematic risk tracking and rapid crisis response; regularly publish independent AI risk assessments.

- UNESCO (AI Ethics Recommendation): Operationalise Ethical Impact Assessments (EIA) to proactively prevent high-risk AI applications; enhance civil society engagement, particularly from the Global South.

- UN Global Digital Compact: Establish regular global scientific AI risk assessments and ensure inclusive civil society participation, especially from the Global South.

- AI Safety and Security Institutes Network: Facilitate international collaboration on verification standards and crisis response frameworks, and share auditing outcomes broadly.

- NATO (AI Security and Governance): Implement NATO-aligned AI verification standards and robust whistleblower protections, and coordinate crisis protocols closely with EU and UN initiatives.

Conclusion: A Roadmap for Effective Governance

The priorities identified by civil society organisations point to a clear agenda for AI governance: binding prohibitions, independent verification, and crisis response frameworks. This four-dimensional roadmap – enforcement mechanisms, risk management systems, inclusive governance, and beneficial applications – offers concrete guidance for policymakers at both global and regional levels.

As AI capabilities advance rapidly, the window for establishing effective governance is narrowing. The upcoming India AI Summit and implementation of existing frameworks present critical opportunities to translate these priorities into action. Further research should expand civil society consultation to include more diverse voices, ensuring governance frameworks truly reflect global needs and perspectives. Replacing aspirational principles with concrete, enforceable mechanisms remains essential to ensuring AI development proceeds in ways that are safe, beneficial, and aligned with the public interest.

This analysis was produced by The Future Society and Renaissance Numérique.

We acknowledge the valuable contributions of the 44 civil society organisations, along with over 11,000 citizens, more than 200 expert organisations, and the 61 participants of the AI Action Summit’s official side event, whose collective insights have significantly informed this priority-setting initiative.
